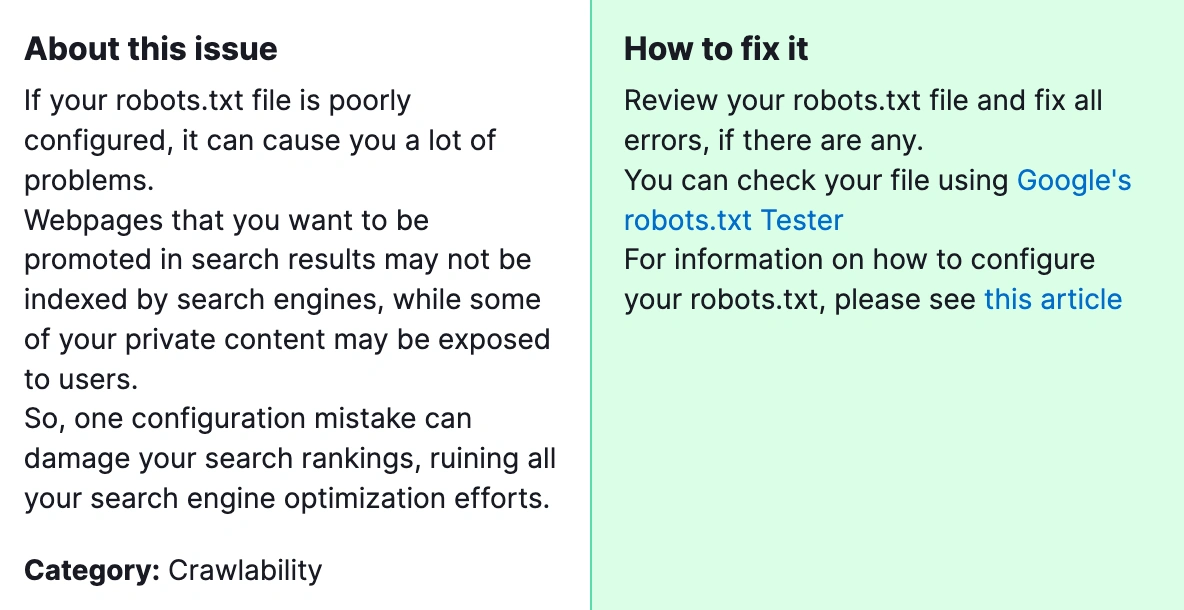

Common robots.txt issues and errors include:

- Robots.txt not in the root directory: Crawlers look for the file only at the root of the host, for example `https://www.example.com/robots.txt`. A robots.txt placed in a subfolder is ignored, so the site behaves as if it had no robots.txt at all.

- Syntax and format errors: These include misused directives, unsupported commands, and malformed lines. Such errors can cause search engines to misinterpret the file or skip the affected rules. A robots.txt validator can help you identify and fix them.

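- Overly broad or incorrect blocking rules: For example, `Disallow: /` under `User-agent: *` blocks the entire site for all crawlers, which is usually unintended. Specify precise paths so that only the intended content is blocked.

- Poor use of wildcards: Misusing the `*` (any sequence of characters) and `$` (end of URL) wildcards can block or allow URLs unintentionally. For example, `Disallow: /*.php` blocks every URL containing `.php`, including `/index.php?page=1`, while `Disallow: /*.php$` blocks only URLs that end in `.php`.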

- Using `noindex` in robots.txt: Google has not supported `noindex` rules in robots.txt since 2019. Control indexing with a robots `meta` tag or an `X-Robots-Tag` HTTP header instead, and do not also block that page in robots.txt: a crawler that cannot fetch the page never sees its `noindex`.

- Blocking important scripts and stylesheets: Blocking resources needed for rendering can prevent search engines from understanding your pages correctly.

- No sitemap URL specified: Including a sitemap URL in robots.txt helps search engines discover your sitemap more easily.

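- Using absolute URLs in rules: `Allow` and `Disallow` rules should use paths relative to the site root (for example, `/private/`), not full URLs such as `https://www.example.com/private/`. The `Sitemap:` line is the exception: it requires a full URL.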

- File not writable or editable: In some CMS setups (e.g., WordPress with Rank Math), the robots.txt file may not be writable because of file permissions or configuration, and those settings must be adjusted before the file can be edited.
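
Several of these problems can be caught mechanically before the file goes live. The following is a minimal Python sketch, not a complete validator: `robots_url_for` and `lint_robots_txt` are hypothetical helpers written for this example, the directive set in `KNOWN_DIRECTIVES` is an assumption, and each check is a simple heuristic for one of the issues listed above (file location, malformed or unsupported lines, `Disallow: /`, absolute paths, `noindex`, blocked scripts and stylesheets, and a missing or relative `Sitemap:` URL).

```python
from urllib.parse import urlsplit, urlunsplit

# Directives this sketch recognizes (an assumption; crawlers differ, and
# Google, for example, ignores crawl-delay).
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def robots_url_for(page_url: str) -> str:
    """Return the URL crawlers request: /robots.txt at the root of the host."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def lint_robots_txt(text: str) -> list[str]:
    """Return warnings for common robots.txt mistakes (heuristics only)."""
    warnings = []
    agents = []        # user-agents of the group currently being read
    in_rules = False   # True once the current group has at least one rule
    has_sitemap = False

    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {lineno}: malformed line (missing ':')")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()

        if field == "user-agent":
            if in_rules:  # a user-agent after rules starts a new group
                agents, in_rules = [], False
            agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if "://" in value:
                warnings.append(f"line {lineno}: use a root-relative path, not an absolute URL")
            elif value and not value.startswith(("/", "*")):
                warnings.append(f"line {lineno}: path should start with '/'")
            if field == "disallow" and value == "/" and "*" in agents:
                warnings.append(f"line {lineno}: 'Disallow: /' blocks the whole site for all crawlers")
            if field == "disallow" and value.endswith((".js", ".css", ".js$", ".css$")):
                warnings.append(f"line {lineno}: blocking {value} may prevent page rendering")
        elif field == "sitemap":
            has_sitemap = True
            if not value.lower().startswith(("http://", "https://")):
                warnings.append(f"line {lineno}: Sitemap needs a full URL")
        elif field == "noindex":
            warnings.append(f"line {lineno}: noindex is not supported in robots.txt")
        elif field not in KNOWN_DIRECTIVES:
            warnings.append(f"line {lineno}: unsupported directive '{field}'")

    if not has_sitemap:
        warnings.append("no Sitemap directive found")
    return warnings


sample = """User-agent: *
Disallow: /
Noindex: /old-page/
Disallow: https://www.example.com/private/
"""
print(robots_url_for("https://www.example.com/blog/post?id=1"))
# https://www.example.com/robots.txt
for warning in lint_robots_txt(sample):
    print(warning)
```

Treat each warning as a prompt to review the line rather than proof of a problem; blocking a single script, for instance, may be intentional.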

How to troubleshoot and fix these issues:

- Verify the file location: Ensure robots.txt is in the root directory of your website.

- Check syntax: Use a robots.txt validator to detect format errors and unsupported directives.

- Review blocking rules: Make sure `Disallow` and `Allow` directives target the intended URLs without overblocking.

- Avoid deprecated directives: Remove any `noindex` lines from robots.txt and use meta tags for noindexing.

- Allow essential resources: Ensure scripts and stylesheets needed for page rendering are not blocked.

- Add a sitemap URL: Include a `Sitemap:` directive with the full URL of your sitemap.

- Fix file permissions: If the file is not writable, adjust server or CMS settings to allow editing.

- Test changes: After updating, check the file with the robots.txt report in Google Search Console (which replaced the older robots.txt Tester), and use the URL Inspection tool to confirm that important pages can still be crawled.
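
When reviewing `Allow` and `Disallow` rules, it helps to check which rule wins for specific URLs before uploading the file. The sketch below assumes Google's documented matching behavior: `*` matches any sequence of characters, `$` marks the end of the URL, the longest matching rule path wins, and `Allow` wins a tie. `is_allowed` is a hypothetical helper that evaluates a single user-agent group only, and it does not replace checking the live file in Search Console. Python's standard `urllib.robotparser` is not used here because it does plain prefix matching without wildcard support and applies the first matching rule, so it can disagree with Google on files like this one.

```python
import re


def _to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt rule path using * and $ into a regex."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape regex metacharacters, then turn each escaped '*' into '.*'.
    body = re.escape(rule_path).replace(r"\*", ".*")
    return re.compile(body + (r"\Z" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], url_path: str) -> bool:
    """Return True if url_path may be crawled under one group's rules.

    rules holds ("allow" | "disallow", path) pairs. The longest matching
    rule path wins; on a tie, "allow" wins. No match means allowed.
    """
    best_len, allowed = -1, True
    for kind, rule_path in rules:
        if not rule_path:  # an empty "Disallow:" blocks nothing
            continue
        if _to_regex(rule_path).match(url_path):  # match() anchors at the start
            length = len(rule_path)
            if length > best_len or (length == best_len and kind == "allow"):
                best_len, allowed = length, kind == "allow"
    return allowed


rules = [
    ("disallow", "/private/"),
    ("allow", "/private/press/"),
    ("disallow", "/*.pdf$"),
]
print(is_allowed(rules, "/private/report.html"))      # False
print(is_allowed(rules, "/private/press/2024.html"))  # True (longer Allow wins)
print(is_allowed(rules, "/files/a.pdf"))              # False
print(is_allowed(rules, "/files/a.pdf?x=1"))          # True ($ anchors the end)
```

Listing the URLs you care about and asserting the expected result for each is a quick way to catch overblocking before a change reaches production.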

Following these steps will help resolve common robots.txt problems that can negatively impact SEO and site indexing.
