Overview of Popular Generative AI Architectures

Generative AI models create new content, such as images, video, or text, by learning patterns from existing data. Three of the most popular architectures in this field are Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Transformers. Each has its own strengths and applications.

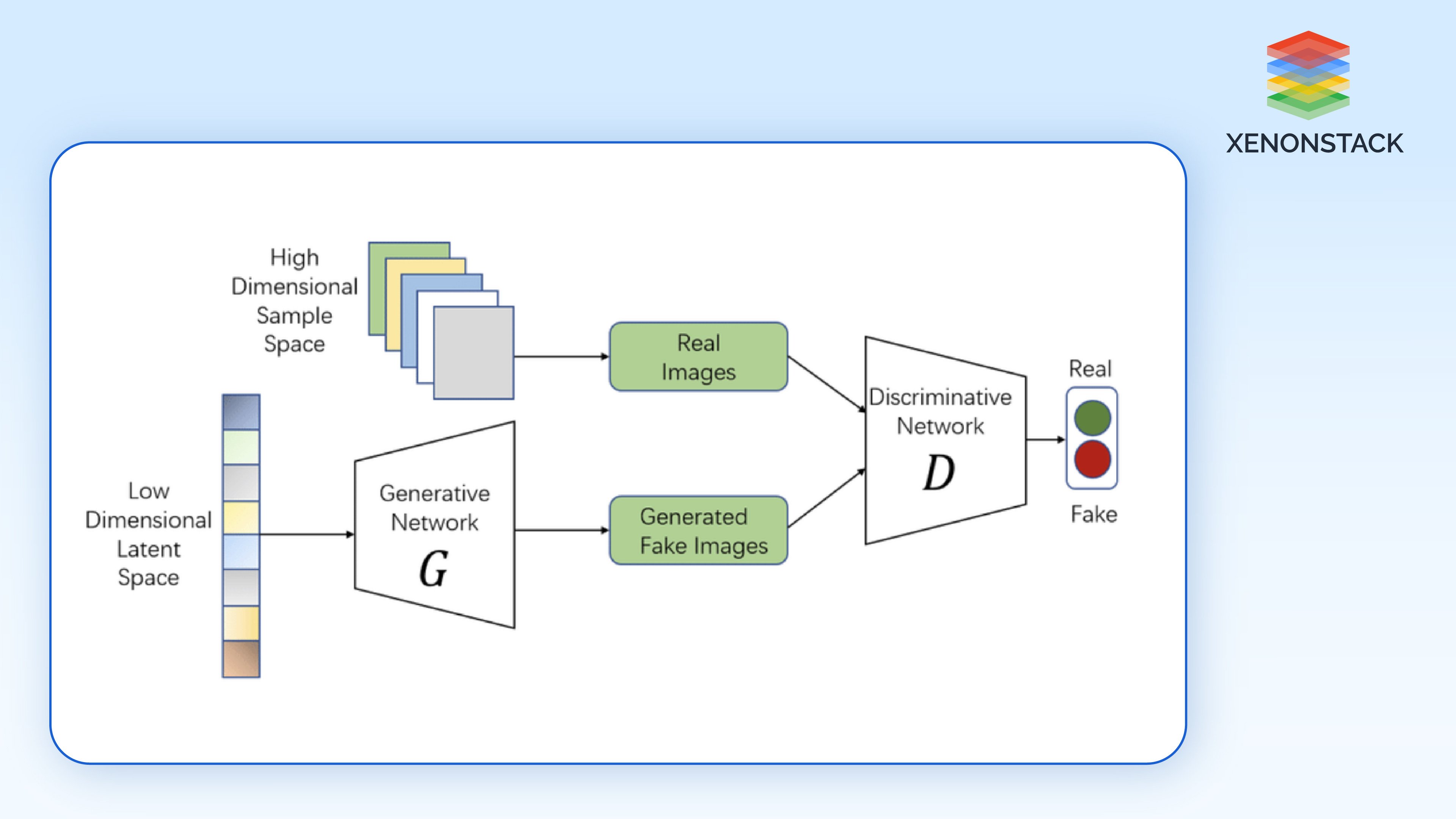

Generative Adversarial Networks (GANs)

GANs consist of two neural networks: a generator and a discriminator. The generator creates new data instances, while the discriminator evaluates these instances for authenticity. Through adversarial training, the generator improves its ability to produce realistic data, while the discriminator becomes better at distinguishing between real and fake data.

Key Features:

- Adversarial Training: The generator and discriminator compete against each other.

- Applications: Image generation, data augmentation, and style transfer.

- Strengths: Can produce highly realistic data.

- Limitations: Training can be unstable, and mode collapse may occur.
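The adversarial objective described above can be sketched with plain binary cross-entropy. This is a minimal illustration, not a training loop: the function names and the sample discriminator scores are hypothetical, and the generator loss uses the common non-saturating form, which is an assumption rather than something the text specifies.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    eps = 1e-12  # guard against log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """Discriminator objective: score real samples as 1 and generated samples as 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator objective: push the discriminator to score fakes as 1."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that each sample is real.
d_real = [0.9, 0.8, 0.95]
d_fake = [0.1, 0.3, 0.2]

print("discriminator loss:", round(discriminator_loss(d_real, d_fake), 4))
print("generator loss:", round(generator_loss(d_fake), 4))
```

The two losses pull in opposite directions: as the discriminator's scores on generated samples rise, the generator loss falls and the discriminator loss grows, which is the competition the bullet list refers to.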

Variational Autoencoders (VAEs)

VAEs use an encoder-decoder architecture to generate new data. The encoder maps each input to a probability distribution over a latent space (typically a Gaussian with a learned mean and variance) rather than to a single point. Sampling from that distribution and decoding the sample yields outputs that vary from one another while still resembling the training data.

Key Features:

- Latent Space Representation: Encodes data into a compressed form.

- Applications: Image and video generation, anomaly detection.

- Strengths: Provides a probabilistic framework for data generation.

- Limitations: Outputs are often blurrier and less realistic than those produced by GANs.
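The randomness in the encoding step is usually implemented with the reparameterization trick, paired with a KL-divergence term that keeps the latent distribution close to a standard normal. The sketch below assumes a diagonal Gaussian latent; the function names and the example mean and log-variance values are hypothetical stand-ins for what a trained encoder would output.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

# Hypothetical encoder outputs for one input, with a 2-dimensional latent space.
mu = [0.5, -1.0]
log_var = [0.0, -2.0]

rng = random.Random(0)  # seeded so repeated runs give the same samples
for _ in range(3):
    print("latent sample:", reparameterize(mu, log_var, rng))
print("KL term:", kl_to_standard_normal(mu, log_var))
```

Each call to `reparameterize` returns a different latent vector for the same input, so a decoder fed these vectors would produce varied outputs. The KL term is what gives the model its probabilistic framing.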

Transformers

Transformers are primarily used for text generation tasks. They leverage self-attention mechanisms to consider the entire context of the input text, enabling them to generate coherent and contextually appropriate text.

Key Features:

- Self-Attention Mechanism: Relates every position in a sequence to every other position, and allows all positions to be processed in parallel.

- Applications: Text generation, translation, and summarization.

- Strengths: Highly effective for sequential data tasks.

- Limitations: The cost of self-attention grows quadratically with sequence length, and images or video must first be converted into token sequences.
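Self-attention can be illustrated with scaled dot-product attention over a handful of token vectors. This is a simplified sketch: it uses the token embeddings directly as queries, keys, and values, whereas real Transformers apply learned projections and multiple heads. The function names and the toy embeddings are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention; returns (outputs, attention_weights)."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Compare this position against every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        # The output is a weighted mix of all value vectors.
        outputs.append([sum(wj * v[d] for wj, v in zip(w, values))
                        for d in range(len(values[0]))])
    return outputs, weights

# Toy 2-dimensional embeddings for a 3-token sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(x, 3) for x in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence, which is how the model takes the whole context into account. The rows do not depend on one another, so they can be computed in parallel.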

Comparison of GANs, VAEs, and Transformers

| Architecture | Primary Use | Strengths | Limitations |
|---|---|---|---|
| GANs | Image/Video Generation | Highly Realistic Data | Unstable Training, Mode Collapse |
| VAEs | Image/Video Generation | Probabilistic Framework | Less Realistic Data |
| Transformers | Text Generation | Contextual Understanding, Parallel Processing | Quadratic Attention Cost on Long Sequences; Inputs Must Be Tokenized |

Each architecture has its own strengths and challenges, which makes it suited to different tasks within generative AI.
