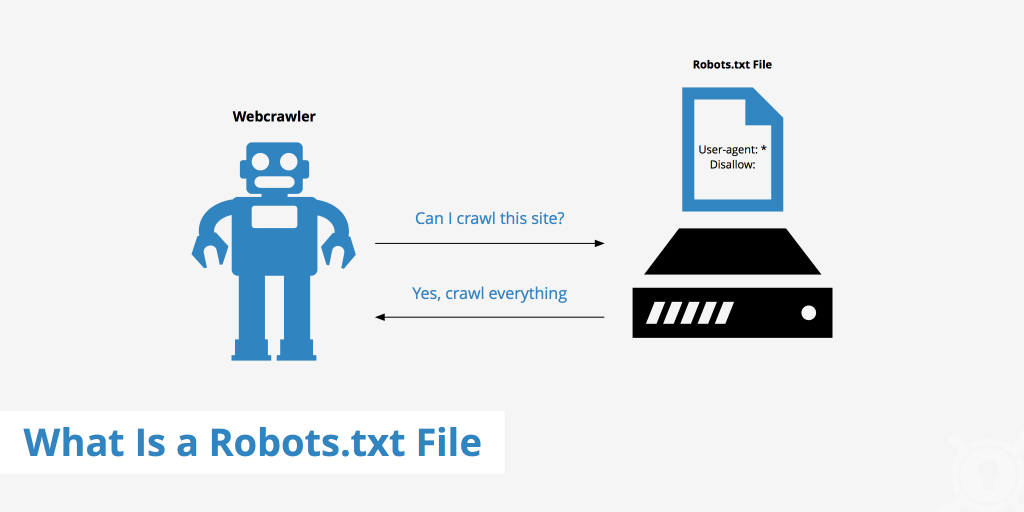

To optimize robots.txt and .htaccess files for security, use robots.txt only to tell search engine crawlers which parts of the site to crawl or skip. Never use it to protect sensitive content. The file is publicly accessible, and its rules are voluntary guidelines that well-behaved bots follow and malicious ones ignore. Use .htaccess instead to enforce actual access restrictions and server-level security.

Key points for optimization:

robots.txt:

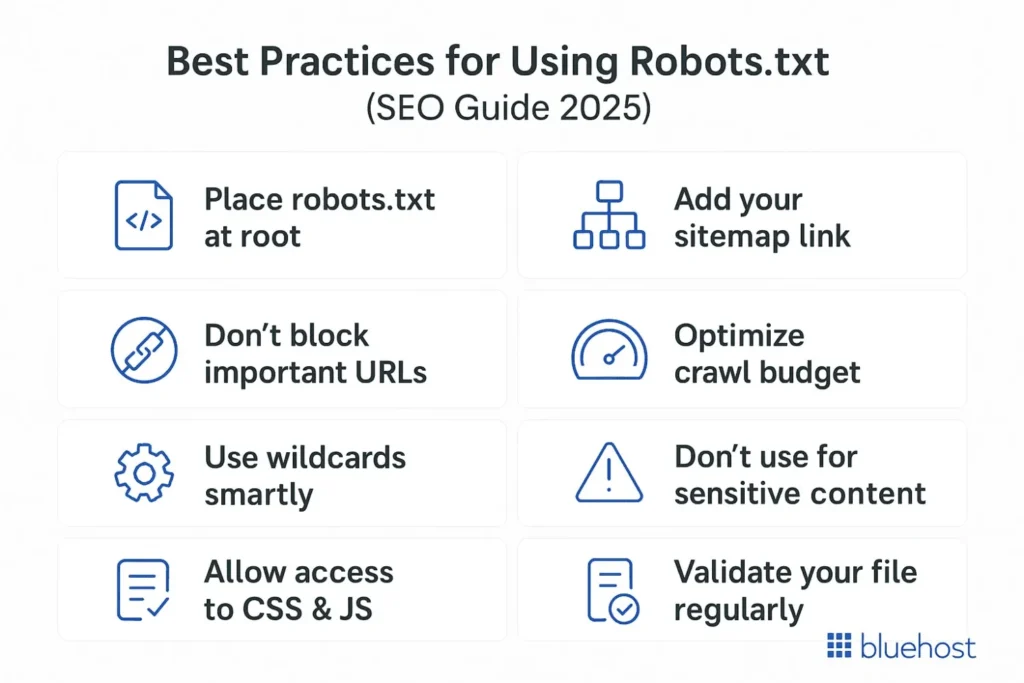

- Use it to disallow crawling of non-public or duplicate content such as admin pages, private directories, or staging areas to improve SEO and manage crawl budget.

- Avoid relying on robots.txt to hide sensitive or private content because it is publicly visible and can act as a "map" for attackers looking for hidden areas.

- Do not block URLs that rely on a noindex robots meta tag. If robots.txt prevents crawlers from fetching such a page, they never see the noindex directive, and the URL can still be indexed (typically without a description) if other pages link to it.

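- Use wildcards (* for any sequence of characters, $ for the end of the URL) carefully to avoid accidentally blocking important pages. For example, Disallow: /*? blocks every URL that contains a query string, and a rule without a trailing slash, such as Disallow: /private, also blocks /private-offers.html.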

- Include the Sitemap location so crawlers can discover your important URLs. A Crawl-delay directive slows down crawlers that honor it, such as Bingbot, but Googlebot ignores it.
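Wildcard mistakes are easier to catch if you can test rules offline before publishing them. The sketch below is a simplified, hypothetical matcher (pattern_to_regex and is_allowed are illustrative names, not a library API) for the * and $ syntax defined in RFC 9309, using that standard's precedence rule: the longest matching pattern wins, and Allow wins a tie. Python's standard urllib.robotparser does not implement wildcards, and real crawlers also normalize percent-encoding, which this sketch skips.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex.

    '*' matches any run of characters; a trailing '$' anchors the end of
    the URL path. Without '$', a pattern is a prefix match.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Evaluate (directive, pattern) rules for one URL path.

    The longest matching pattern wins; on a tie, Allow wins.
    If no rule matches, crawling is allowed.
    """
    best_len, allowed = -1, True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty Disallow/Allow value matches nothing here
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


if __name__ == "__main__":
    rules = [("Disallow", "/*?"), ("Disallow", "/*.pdf$"), ("Allow", "/public/*.pdf$")]
    print(is_allowed("/products?page=2", rules))      # False: any query string is blocked
    print(is_allowed("/docs/guide.pdf", rules))       # False: all PDFs are blocked
    print(is_allowed("/public/brochure.pdf", rules))  # True: the longer Allow rule wins
    print(is_allowed("/private-offers.html", [("Disallow", "/private")]))  # False: prefix match
```

The last line shows the prefix pitfall: without a trailing slash, a Disallow rule catches every path that merely starts with the same characters.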

.htaccess:

- Use it to enforce real security by restricting access to sensitive files, directories, or IP addresses.

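- Block known malicious bots (by User-Agent string, which only stops bots that identify themselves honestly), specific IPs, or entire countries if your site serves a local audience (country blocking requires a GeoIP module).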

- Implement password protection for truly private areas rather than relying on robots.txt.

- Use .htaccess to improve site speed with caching rules for images, CSS, and JavaScript.

- Deny access to sensitive files (e.g., configuration files) and prevent directory listing.

Together, robots.txt manages crawler behavior for SEO purposes, while .htaccess enforces server-level security and access control. Relying solely on robots.txt for security is ineffective and risky because it publicly exposes the paths you want to keep private.
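To make the "map" risk concrete, here is a minimal sketch of what anyone can do with a site's public /robots.txt: pull out every Disallow path. The exposed_paths helper is hypothetical and uses simple line parsing that ignores which User-agent group a rule belongs to.

```python
def exposed_paths(robots_txt: str) -> list[str]:
    """Return every non-empty Disallow path in robots.txt content.

    Because /robots.txt is public, this list is exactly what an attacker
    learns about the paths a site would rather keep out of sight.
    """
    paths = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


if __name__ == "__main__":
    sample = """User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
"""
    print(exposed_paths(sample))  # ['/admin/', '/private/']
```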

Example robots.txt snippet for crawl management (keep in mind that every path listed here is publicly visible):

```text
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
Crawl-delay: 5

Sitemap: https://www.example.com/sitemap.xml
```
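To check how a compliant crawler reads a file like this, Python's standard urllib.robotparser can parse the rules offline. The sketch below assumes a hypothetical crawler name, ExampleBot. The rules are written with no blank line inside the User-agent group, because this parser discards a group when a blank line directly follows its User-agent line.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt, with no blank lines inside the User-agent group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public/
Crawl-delay: 5

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt content directly, without any network request."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    agent = "ExampleBot"  # hypothetical crawler name
    for path in ["/admin/login", "/private/report.pdf", "/public/index.html", "/blog/"]:
        allowed = rp.can_fetch(agent, "https://www.example.com" + path)
        print(f"{path}: {'allowed' if allowed else 'disallowed'}")
    print("Crawl-delay:", rp.crawl_delay(agent))  # 5
    print("Sitemaps:", rp.site_maps())            # ['https://www.example.com/sitemap.xml']
```

A well-behaved crawler performs a check like can_fetch() before each request. Nothing forces any other client to do so, which is why the actual protection for /admin/ has to come from the server.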

Example .htaccess rules for security (Apache 2.4 syntax; they only take effect if the server's AllowOverride setting permits them):

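```apache
# ----- Site root: /var/www/html/.htaccess -----

# Block a specific IP address (Apache 2.4 authorization syntax)
<RequireAll>
    Require all granted
    Require not ip 123.45.67.89
</RequireAll>

# Disable directory listing
Options -Indexes

# Cache static files (applied only if mod_expires is loaded)
<IfModule mod_expires.c>
    <FilesMatch "\.(jpg|jpeg|png|gif|css|js)$">
        ExpiresActive On
        ExpiresDefault "access plus 1 month"
    </FilesMatch>
</IfModule>

# ----- Admin area: /var/www/html/admin/.htaccess -----
# <Directory> sections are not allowed in .htaccess files, so password
# protection goes in a separate .htaccess inside the directory itself.
# Keep the .htpasswd file outside the web root.
AuthType Basic
AuthName "Restricted Area"
AuthUserFile /path/to/.htpasswd
Require valid-user
```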
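Apache ignores .htaccess files entirely when AllowOverride is set to None, so it is worth confirming from the outside that the rules are actually enforced. This sketch uses Python's urllib to request each protected URL and reports whether the server refused it with 401 or 403. The helpers fetch_status and audit are hypothetical names, and the paths you pass in should be URLs on a site you control.

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code of a GET request, including 4xx/5xx codes."""
    req = urllib.request.Request(url, headers={"User-Agent": "access-check/0.1"})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status  # redirects are followed, so this is the final status
    except urllib.error.HTTPError as err:
        return err.code  # urllib raises HTTPError for 4xx and 5xx responses


def audit(base_url: str, protected_paths: list[str]) -> dict[str, bool]:
    """Map each path to True if the server refused it with 401 or 403."""
    base = base_url.rstrip("/")
    return {path: fetch_status(base + path) in (401, 403) for path in protected_paths}
```

For example, audit("https://www.example.com", ["/admin/"]) should return {"/admin/": True} once password protection is live. A False entry means the server answered with something else, such as 200 (the rule is not applied) or 404 (the path does not exist).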

In summary, use robots.txt to guide search engines and manage crawl behavior but never to secure sensitive content, which should be protected via .htaccess or other server-side methods like authentication or firewall rules.
