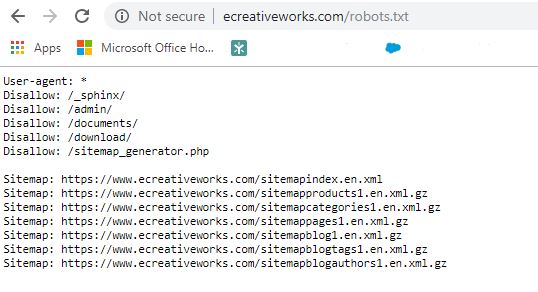

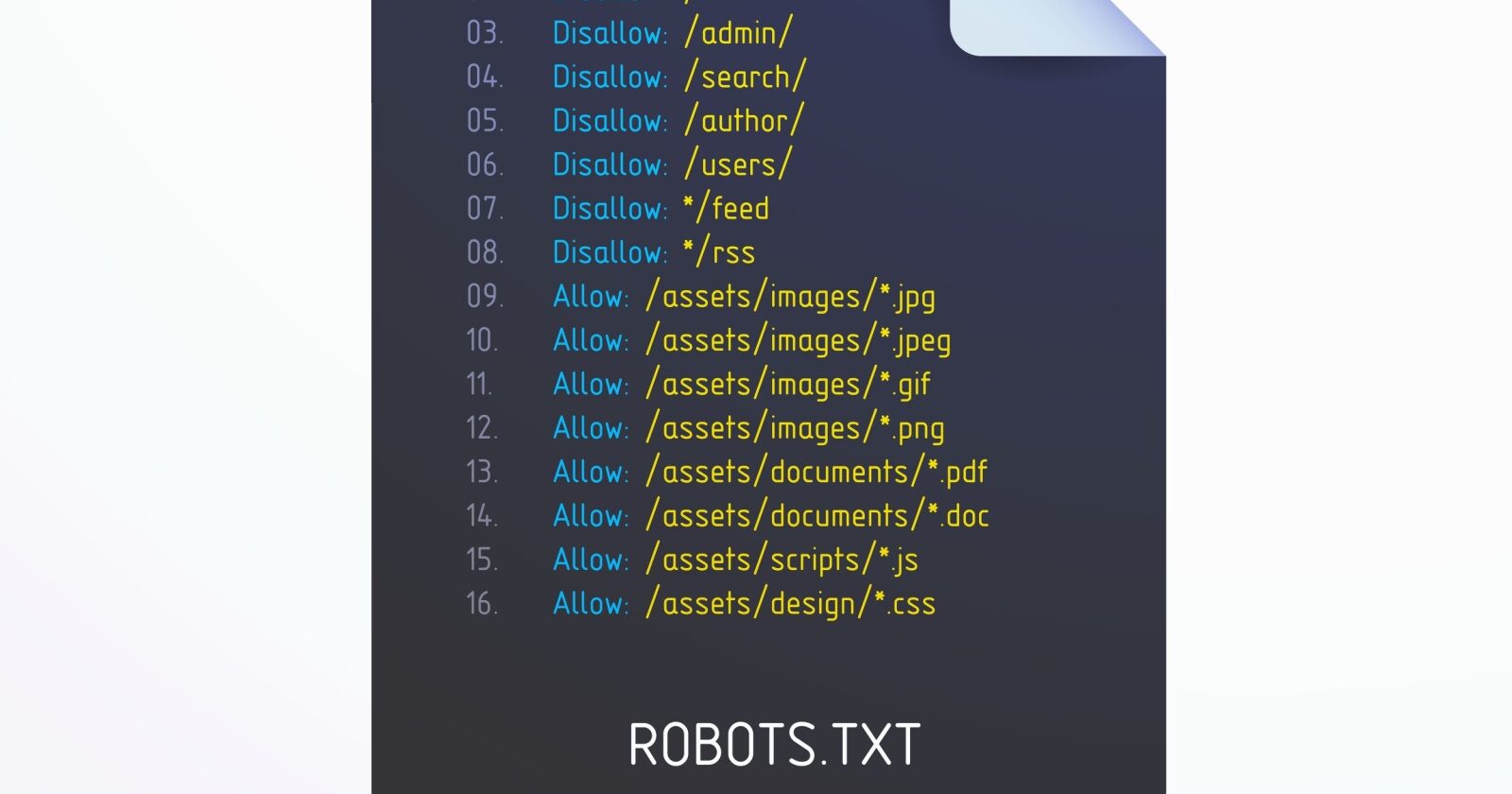

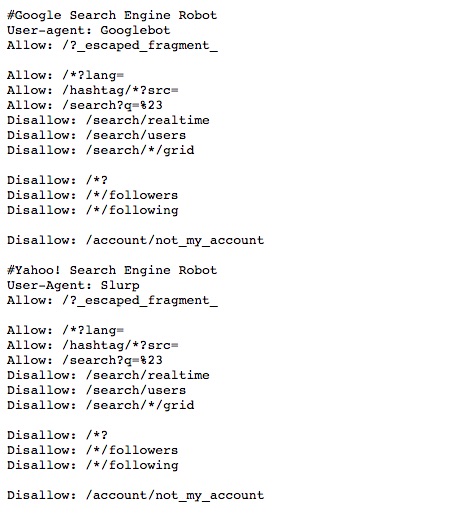

Here are examples of robots.txt files for different scenarios and user agents, illustrating common use cases and syntax:

1. Allow all web crawlers access to everything

User-agent: *

Disallow:

This tells all crawlers they can crawl the entire site without restrictions.

2. Block all web crawlers from the entire site

User-agent: *

Disallow: /

This tells all compliant crawlers not to crawl any page on the site.
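Examples 1 and 2 can be sanity-checked offline with Python's standard-library `urllib.robotparser`. The sketch below is only illustrative: the `can_crawl` helper, the `ExampleBot` agent name, and the example.com URL are assumptions and not part of the examples above. The directive lines are kept contiguous because this parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, agent: str, url: str) -> bool:
    """Parse robots.txt text in memory and ask whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


ALLOW_ALL = "User-agent: *\nDisallow:\n"   # example 1
BLOCK_ALL = "User-agent: *\nDisallow: /\n"  # example 2

# Hypothetical crawler name and URL, used only for illustration.
url = "https://www.example.com/any/page.html"
print(can_crawl(ALLOW_ALL, "ExampleBot", url))  # True
print(can_crawl(BLOCK_ALL, "ExampleBot", url))  # False
```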

3. Block a specific user agent from a specific folder

User-agent: Googlebot

Disallow: /example-subfolder/

Only Google's crawler is blocked from crawling the specified folder; other crawlers can access it.

4. Block a specific user agent from a specific page

User-agent: Bingbot

Disallow: /example-subfolder/blocked-page.html

Only Bing's crawler is blocked from crawling a specific page.
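A quick way to confirm that the rules in examples 3 and 4 affect only the named crawler is to query them per user agent. This is a minimal sketch using Python's standard-library `urllib.robotparser`; the `load` helper, the example.com host, and the extra page names are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def load(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text held in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


folder_rules = load("User-agent: Googlebot\nDisallow: /example-subfolder/\n")
page_rules = load(
    "User-agent: Bingbot\nDisallow: /example-subfolder/blocked-page.html\n"
)

base = "https://www.example.com"  # hypothetical host

# Example 3: only Googlebot is kept out of the folder.
print(folder_rules.can_fetch("Googlebot", base + "/example-subfolder/page.html"))  # False
print(folder_rules.can_fetch("Bingbot", base + "/example-subfolder/page.html"))    # True

# Example 4: only Bingbot is kept away from the single page.
print(page_rules.can_fetch("Bingbot", base + "/example-subfolder/blocked-page.html"))  # False
print(page_rules.can_fetch("Bingbot", base + "/example-subfolder/other-page.html"))    # True
```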

5. Block multiple user agents from different folders

User-agent: Googlebot

Disallow: /admin/

User-agent: Slurp

Disallow: /private/

Googlebot is blocked from /admin/ and Yahoo's Slurp crawler is blocked from /private/.

6. Allow all except one user agent

User-agent: Googlebot

Disallow: /

User-agent: *

Disallow:

Blocks Googlebot from crawling the entire site but allows all other crawlers full access.
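The sketch below checks example 6 with Python's standard-library `urllib.robotparser` and also tries the two groups in the opposite order. It suggests that, at least for this parser, a crawler with its own group uses that group wherever it appears and only falls back to the * group otherwise. The `can_crawl` helper and the example.com URL are assumptions.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, agent: str, url: str) -> bool:
    """Parse robots.txt text in memory and ask whether `agent` may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


GOOGLEBOT_FIRST = """\
User-agent: Googlebot
Disallow: /

User-agent: *
Disallow:
"""

WILDCARD_FIRST = """\
User-agent: *
Disallow:

User-agent: Googlebot
Disallow: /
"""

url = "https://www.example.com/index.html"  # hypothetical URL
for label, text in (("googlebot-first", GOOGLEBOT_FIRST),
                    ("wildcard-first", WILDCARD_FIRST)):
    # Prints the verdict for Googlebot, then for another crawler.
    print(label, can_crawl(text, "Googlebot", url), can_crawl(text, "Bingbot", url))
# googlebot-first False True
# wildcard-first False True
```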

7. Sitemap declaration with access for all

User-agent: *

Disallow:

Sitemap: https://www.example.com/sitemap.xml

Allows all crawlers full access and gives the sitemap's location so crawlers can discover the site's URLs.
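To see how a client might pick up the Sitemap line, the following sketch reads example 7 with Python's standard-library `urllib.robotparser`. It assumes Python 3.8 or later, where `site_maps()` is available; the `ExampleBot` agent name is hypothetical.

```python
from urllib.robotparser import RobotFileParser

robots_txt = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# site_maps() returns a list of sitemap URLs, or None if no Sitemap line exists.
sitemaps = parser.site_maps()
print(sitemaps)  # ['https://www.example.com/sitemap.xml']

# The Sitemap line does not change crawl permissions.
print(parser.can_fetch("ExampleBot", "https://www.example.com/"))  # True
```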

8. Typical WordPress robots.txt

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Blocks crawlers from the WordPress admin area but allows access to the AJAX handler.
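The Allow line takes effect because crawlers that follow RFC 9309 apply the longest matching path, with Allow preferred on a tie. The function below is a simplified sketch of that precedence rule, not any crawler's actual implementation: `is_allowed` is a hypothetical helper, it handles plain path prefixes only (no `*` or `$` wildcards), and `/wp-admin/options.php` and `/blog/hello-world/` are illustrative paths.

```python
from typing import List, Tuple

Rule = Tuple[str, str]  # (directive, path prefix), e.g. ("disallow", "/wp-admin/")


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Apply longest-match precedence within one group; Allow wins ties."""
    best_length = -1
    allowed = True  # no matching rule means the path may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty pattern matches nothing
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_length or (len(prefix) == best_length and is_allow):
            best_length = len(prefix)
            allowed = is_allow
    return allowed


wordpress_rules = [
    ("disallow", "/wp-admin/"),
    ("allow", "/wp-admin/admin-ajax.php"),
]

print(is_allowed(wordpress_rules, "/wp-admin/admin-ajax.php"))  # True
print(is_allowed(wordpress_rules, "/wp-admin/options.php"))     # False
print(is_allowed(wordpress_rules, "/blog/hello-world/"))        # True
```

Parsers that predate the RFC may instead apply the first matching rule in file order, so it is worth testing the file against the crawlers that matter for the site.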

Notes on syntax and usage:

- User-agent: specifies which crawler the rules apply to (e.g., Googlebot, Bingbot, or * for all).

- Disallow: specifies URL paths that crawlers should not access.

- Allow: overrides a Disallow for a path inside a disallowed folder; when both rules match a URL, the longer (more specific) path wins.

- The order of the user-agent blocks does not matter; a crawler follows the block that most specifically matches its name and falls back to the * block only if no named block matches.

- Paths are case-sensitive.

- Sitemap: gives the full URL of a sitemap to help crawlers discover the site's pages.
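The case-sensitivity note can be illustrated with Python's standard-library `urllib.robotparser`. In this sketch the `/Private/` rule, the example.com host, and the page name are assumptions; with this parser the path comparison is case-sensitive while the user-agent name is matched case-insensitively.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse(["User-agent: Googlebot", "Disallow: /Private/"])

base = "https://www.example.com"  # hypothetical host

# Same casing as the rule: blocked.
print(parser.can_fetch("Googlebot", base + "/Private/report.html"))  # False
# Different path casing: the rule does not match, so crawling is allowed.
print(parser.can_fetch("Googlebot", base + "/private/report.html"))  # True
# Different user-agent casing: the group still applies.
print(parser.can_fetch("googlebot", base + "/Private/report.html"))  # False
```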

These examples cover a range of common scenarios for controlling crawler access tailored to specific bots or site sections, useful for SEO and site management purposes.
