For multilingual and international websites, handling the robots.txt file effectively involves structuring it to optimize crawling while ensuring search engines can access all language versions appropriately.

Key points for managing robots.txt in this context:

- Use different URLs for each language version (e.g., language-specific directories like `/en/`, `/fr/` or subdomains like `en.example.com`, `fr.example.com`). This allows clear separation of content by language, which is preferred over using cookies or browser settings to serve different languages on the same URL.

- Avoid blocking language-specific directories or subdomains in robots.txt unless you want to prevent crawling of certain language versions (each subdomain is governed by its own robots.txt file). Blocking them stops search engines from crawling those pages, which keeps their content out of search results and harms SEO and visibility.

- Allow crawling of all language versions so that search engines can discover and index them. Use `Allow` directives if you have previously disallowed broader sections but want to permit specific language pages.

- Do not mix languages on the same page and ensure each language version has a unique URL. This helps search engines correctly identify and rank pages by language.

-

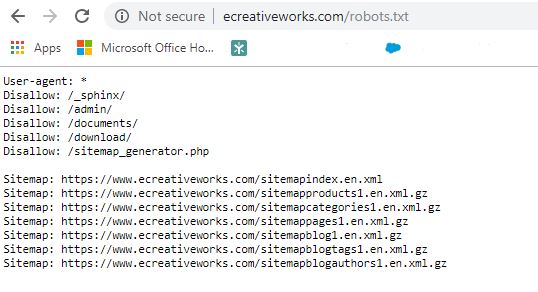

Use hreflang annotations on each page or via sitemaps to signal to search engines the relationship between language versions. This helps Google serve the correct language version in search results.

- If you have identical content targeting different regions sharing the same language (e.g., English for US, Canada, UK), use canonical tags combined with hreflang to avoid duplicate content issues while indicating the preferred version.

-

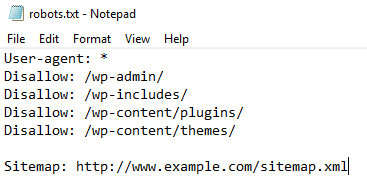

The robots.txt file syntax includes:

User-agentto specify which crawler the rule applies to.Disallowto block crawling of specific paths.Allowto permit crawling of specific pages or folders even if a broader path is disallowed.
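
One way to catch an accidental block is to test the language URLs against the rules before deploying them. The following is a minimal sketch using Python's standard `urllib.robotparser`; the rules, the `example.com` URLs, and the `blocked_urls` helper are illustrative assumptions, not taken from any real site. Python's parser applies the first matching rule in file order, while Google picks the most specific (longest) matching rule, so the `Allow` line is listed before the broader `Disallow` to get the same result under both.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a site that uses /en/ and /fr/ directories.
# The /admin/ and /fr/drafts/ paths are assumptions made for illustration.
ROBOTS_TXT = """\
User-agent: *
Allow: /fr/drafts/public/
Disallow: /fr/drafts/
Disallow: /admin/
"""

# Language URLs that should stay crawlable (also hypothetical).
LANGUAGE_URLS = [
    "https://example.com/en/",
    "https://example.com/fr/",
    "https://example.com/fr/drafts/public/guide.html",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "*") -> list[str]:
    """Return the URLs that the given robots.txt rules forbid for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, LANGUAGE_URLS):
        print("blocked:", url)
```

With these rules no language URL is reported as blocked. Replacing the rules with a single `Disallow: /fr/` would report both French URLs, which is the kind of mistake this check is meant to surface.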

In summary, for multilingual sites, the robots.txt should be configured to allow crawling of all language-specific URLs (whether directories or subdomains), avoid blocking language versions, and rely on hreflang and canonical tags to manage language and regional targeting rather than blocking via robots.txt. This ensures search engines can crawl, index, and serve the correct language versions effectively.
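
The hreflang side of this can be sketched as a small generator for the `<link rel="alternate">` tags that go in the `<head>` of each language version. This is an illustration under stated assumptions: the `VERSIONS` mapping, the URLs, and the `hreflang_links` helper are hypothetical, and the `x-default` fallback entry is an optional addition that the text above does not require.

```python
from html import escape

# Hypothetical language versions of one page: hreflang code -> absolute URL.
VERSIONS = {
    "en": "https://example.com/en/pricing",
    "fr": "https://example.com/fr/pricing",
}


def hreflang_links(versions: dict[str, str], default_lang: str) -> list[str]:
    """Build the <link rel="alternate"> tags shared by every language version."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in sorted(versions.items())
    ]
    # Optional x-default entry: the fallback for users matching no listed language.
    fallback = escape(versions[default_lang])
    tags.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}" />')
    return tags


if __name__ == "__main__":
    print("\n".join(hreflang_links(VERSIONS, default_lang="en")))
```

The same set of tags, including the self-referencing one, would typically be emitted on every language version so that the annotations are reciprocal.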
