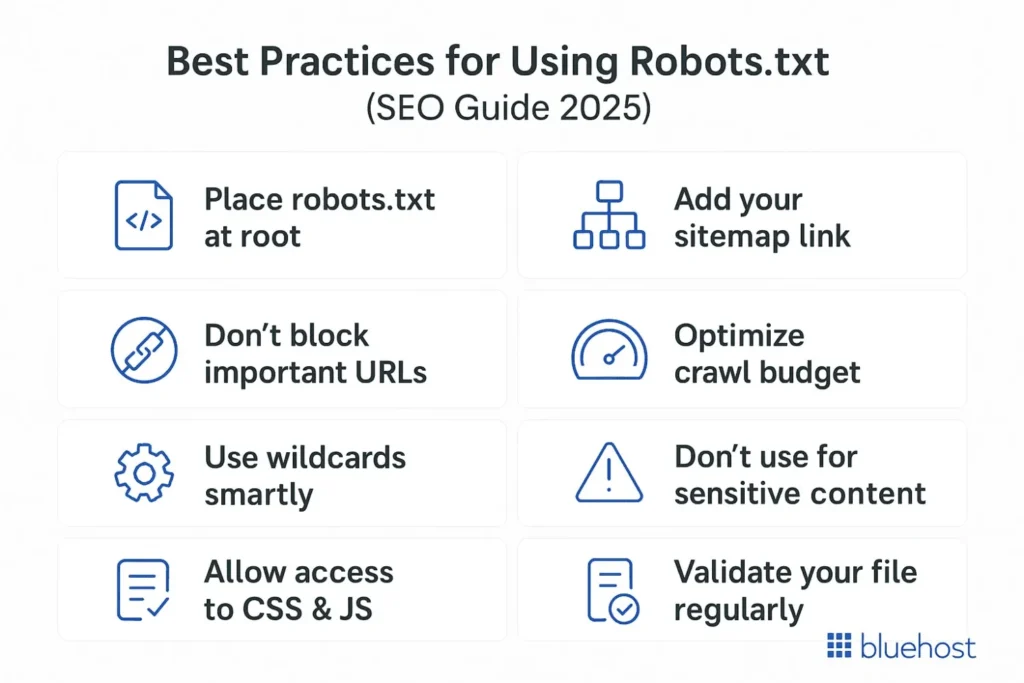

Advanced robots.txt techniques for managing crawl budget and blocking duplicate content focus on controlling which URLs search engine crawlers are allowed to crawl, so that crawl activity is spent on the pages that matter for SEO.

Key techniques include:

-

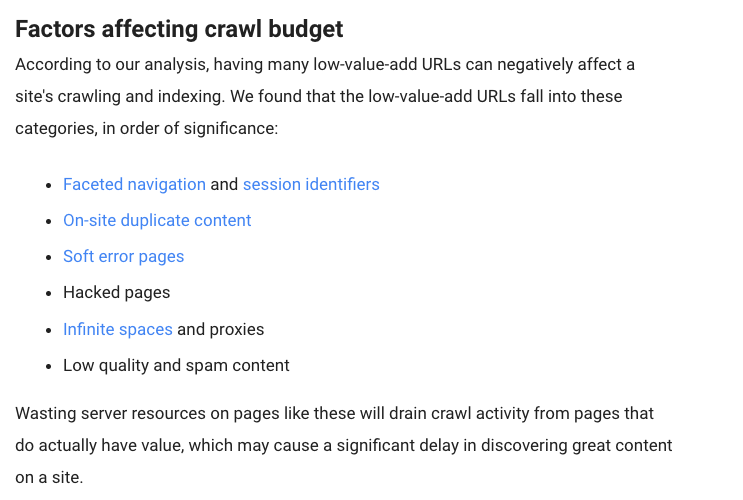

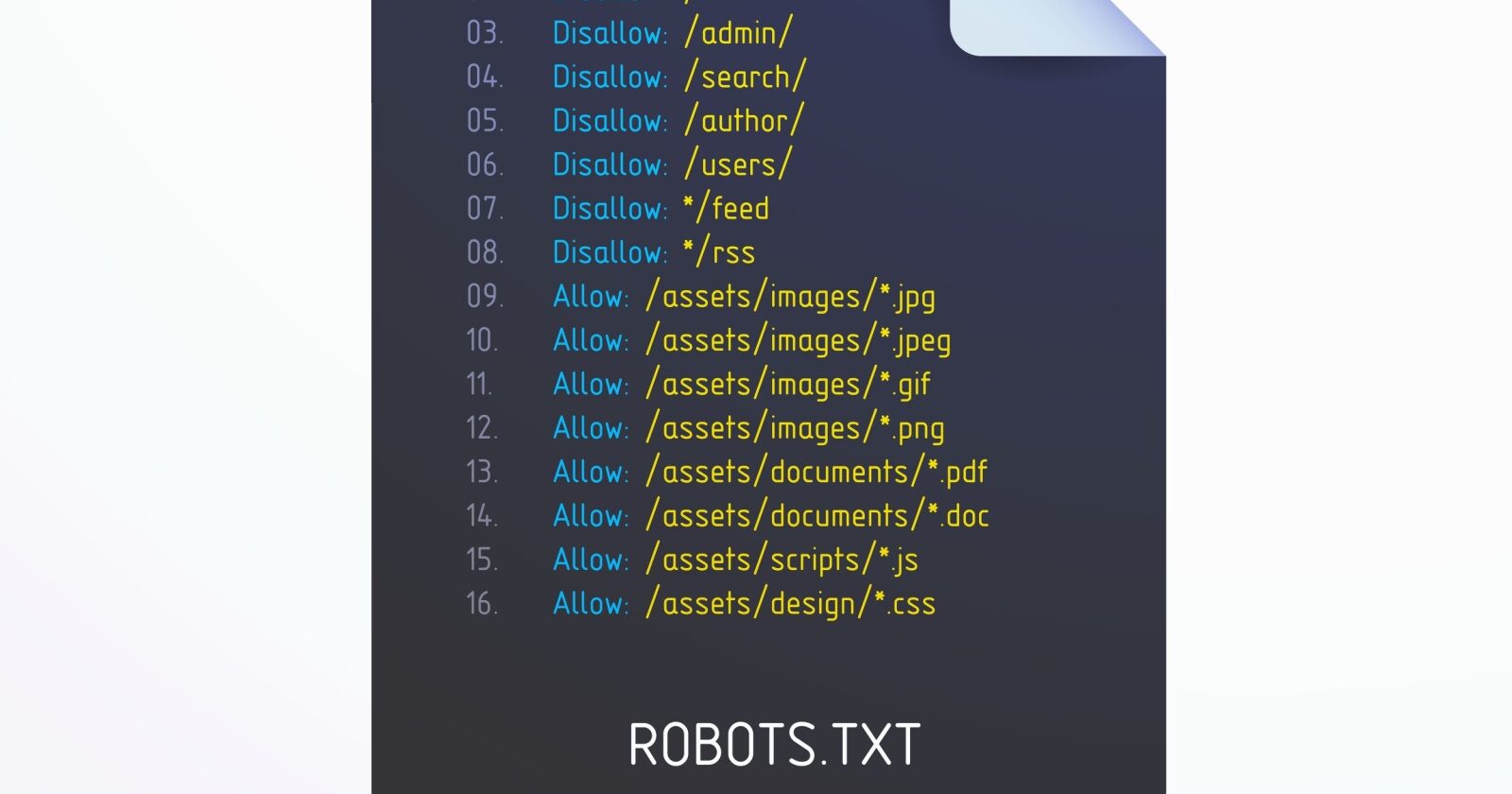

Disallow low-value or duplicate URLs: Use robots.txt to block crawling of URLs that generate duplicate content or have little SEO value, such as filtered product pages, session IDs, printer-friendly versions, or URLs with tracking parameters. This prevents crawlers from wasting crawl budget on redundant or non-essential pages.

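- Block query parameters: If your site uses many query string parameters (e.g., for filtering or sorting), disallow crawling of these parameterized URLs in robots.txt. Each combination of parameters produces a separate near-duplicate URL, and blocking them keeps those URLs from consuming crawl budget. Major crawlers, including Googlebot, support the `*` and `$` wildcards for this (for example, `Disallow: /*?sort=`).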

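- Don't use robots.txt to control indexing: Blocking a page you want indexed keeps search engines from reading its content, while blocking a page you want removed does not guarantee removal, because a blocked URL can still be indexed if other pages link to it. For pages you want out of search results, use a noindex meta tag, or a canonical tag for duplicates, and leave those pages crawlable so search engines can see the tag. Robots.txt is best for blocking crawling of truly unimportant or duplicate content.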

- Organize URL structure and site architecture: A flat, logical site structure with clear navigation and hub pages helps crawlers find important content quickly and keeps important pages from being buried deep in the site or orphaned without internal links. While not a robots.txt setting, this complements crawl budget management.

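- Combine robots.txt with canonical tags: Use robots.txt to block crawling of URL patterns that have no search value at all, and use canonical tags on crawlable duplicate or parameterized URLs, pointing to the preferred version, to consolidate ranking signals and avoid duplicate content issues. Crawlers cannot read a canonical tag on a URL that robots.txt blocks, so choose one mechanism per URL pattern rather than applying both to the same URLs.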

- Regularly audit and update robots.txt: As your site evolves, review which URLs should be blocked to keep crawl budget focused on high-value pages and avoid accidental blocking of important content.
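The blocking and auditing techniques above can be sketched as a robots.txt file plus a small checker. This Python sketch assumes a hypothetical store whose sorting, filtering, and session parameters are named `sort`, `filter`, and `sessionid` and whose printer-friendly pages live under `/print/`; these names are illustrative, not taken from a real site. Tracking parameters are deliberately left crawlable here so that canonical tags on those URLs stay visible. The matcher is a simplified take on the matching rules in RFC 9309, which major crawlers follow: `*` matches any sequence of characters, `$` anchors the end of the URL, the longest matching pattern wins, and Allow wins a tie. It only reads groups for one exact user-agent name and does not normalize percent-encoding, so treat it as a quick sanity check during an audit, not a substitute for a search engine's own robots.txt tools.

```python
from __future__ import annotations

import re

# Hypothetical robots.txt for an example store. The paths and parameter
# names (sessionid, sort, filter, /print/) are illustrative assumptions.
ROBOTS_TXT = """
User-agent: *
# Session IDs
Disallow: /*?sessionid=
Disallow: /*&sessionid=
# Sorting and filtering parameters
Disallow: /*?sort=
Disallow: /*&sort=
Disallow: /*?filter=
Disallow: /*&filter=
# Printer-friendly duplicates
Disallow: /print/
"""


def parse_rules(robots_txt: str, agent: str = "*") -> list[tuple[str, str]]:
    """Collect (kind, pattern) rules from groups whose User-agent equals `agent`.

    Simplification: exact, case-insensitive agent names only.
    """
    rules: list[tuple[str, str]] = []
    group_agents: list[str] = []
    in_rules = False  # becomes True once the current group has a rule line
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                group_agents, in_rules = [], False
            group_agents.append(value.lower())
        elif key in ("allow", "disallow"):
            in_rules = True
            if agent.lower() in group_agents and value:  # empty value matches nothing
                rules.append((key, value))
    return rules


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, '$' end anchor) to a regex."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = "".join(".*" if ch == "*" else re.escape(ch) for ch in body)
    return re.compile(regex + (r"\Z" if anchored else ""))


def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Longest match wins; on equal length, Allow beats Disallow."""
    best_len, allowed = -1, True  # no matching rule means crawling is allowed
    for kind, pattern in rules:
        if pattern_to_regex(pattern).match(path):  # match() anchors at the path start
            if len(pattern) > best_len or (len(pattern) == best_len and kind == "allow"):
                best_len, allowed = len(pattern), kind == "allow"
    return allowed


def audit(important_paths: list[str], rules: list[tuple[str, str]]) -> list[str]:
    """Return important paths that the current rules would block."""
    return [path for path in important_paths if not is_allowed(path, rules)]


if __name__ == "__main__":
    rules = parse_rules(ROBOTS_TXT)
    for path in ["/shoes", "/shoes?sort=price", "/shoes?color=red&filter=size-9",
                 "/cart?sessionid=abc123", "/print/shoes"]:
        print(path, "allowed" if is_allowed(path, rules) else "blocked")
    print(audit(["/", "/shoes", "/print/shoes"], rules))  # ['/print/shoes']
```

In the usage example, `audit` returns `['/print/shoes']` because that path is listed as important but falls under `Disallow: /print/`; in a real audit, any non-empty result points to a rule that needs review.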

In summary, advanced robots.txt management involves disallowing crawling of low-value, duplicate, or parameterized URLs, structuring your site for efficient crawling, and using canonical tags alongside robots.txt to consolidate duplicate content. This approach helps search engines spend their crawl budget on your most important pages, which supports faster and more complete indexing and better SEO performance.
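For duplicates that should stay crawlable, a canonical tag tells search engines which URL is preferred. The Python sketch below is a hypothetical helper that derives a canonical URL by dropping parameters assumed not to change page content (`utm_*`, `gclid`, `fbclid`; adjust the list for your site), sorting the remaining parameters, and removing the fragment, then renders the `<link rel="canonical">` element. It uses only the standard library's `urllib.parse` and `html` modules. Pages that carry this tag must not be disallowed in robots.txt, otherwise crawlers never see it.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical set of parameters assumed not to change page content.
IGNORED_PARAMS = {"gclid", "fbclid"}
IGNORED_PREFIXES = ("utm_",)


def canonical_url(url: str) -> str:
    """Return the preferred form of `url` for use in a canonical tag."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    )
    # Lowercase the host, default an empty path to "/", and drop the fragment.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path or "/",
                       urlencode(kept), ""))


def canonical_link_tag(url: str) -> str:
    """Render the <link rel="canonical"> element for the page at `url`."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


if __name__ == "__main__":
    print(canonical_link_tag(
        "https://Example.com/shoes?utm_source=news&color=red&gclid=abc123"))
    # <link rel="canonical" href="https://example.com/shoes?color=red">
```

Sorting the remaining parameters maps `?color=red&size=9` and `?size=9&color=red` to the same canonical URL; whether that is appropriate depends on whether parameter order ever changes the content on your site.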
