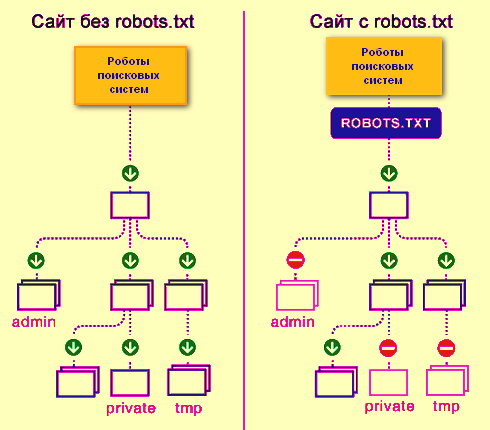

The common directives used in a robots.txt file are:

- User-agent: Specifies which web crawlers (bots) the rules apply to. It can target a specific bot (e.g., Googlebot) or all bots using a wildcard (*).
- Disallow: Tells the specified user-agent which paths or files it is not allowed to crawl. If a path is disallowed, the crawler should not access it.
- Allow: Overrides a disallow directive to permit crawling of a specific path or file within a disallowed directory.
- Sitemap: Provides the location of the website’s sitemap to help crawlers efficiently find and index pages.

Allow and Disallow rules are organized into groups in the robots.txt file: each group starts with one or more User-agent lines, followed by the allow or disallow rules for those agents. Sitemap lines sit outside any group and apply to the whole file. Directive names are not case-sensitive, but paths are. Comments start with the # symbol and run to the end of the line.
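To make the grouping rules concrete, here is a minimal Python sketch of how a file could be split into groups. The function name `parse_robots` and the returned data layout are assumptions made for illustration, not part of any standard library, and the sketch ignores less common directives and wildcard handling.

```python
def parse_robots(text):
    """Split robots.txt text into per-agent rules and a list of sitemaps.

    Returns (groups, sitemaps), where groups maps a lowercased user-agent
    to a list of (directive, path) pairs. Illustrative sketch only.
    """
    groups = {}
    sitemaps = []
    current_agents = []
    last_was_agent = False

    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or ":" not in line:
            continue
        name, value = line.split(":", 1)
        name = name.strip().lower()  # directive names are not case-sensitive
        value = value.strip()        # paths keep their original case

        if name == "user-agent":
            if not last_was_agent:
                current_agents = []  # a new group begins
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
            last_was_agent = True
        elif name in ("allow", "disallow"):
            for agent in current_agents:
                groups[agent].append((name, value))
            last_was_agent = False
        elif name == "sitemap":
            sitemaps.append(value)   # not tied to any group

    return groups, sitemaps
```

Consecutive User-agent lines share the rules that follow them, which is why the sketch keeps a list of current agents rather than a single name.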

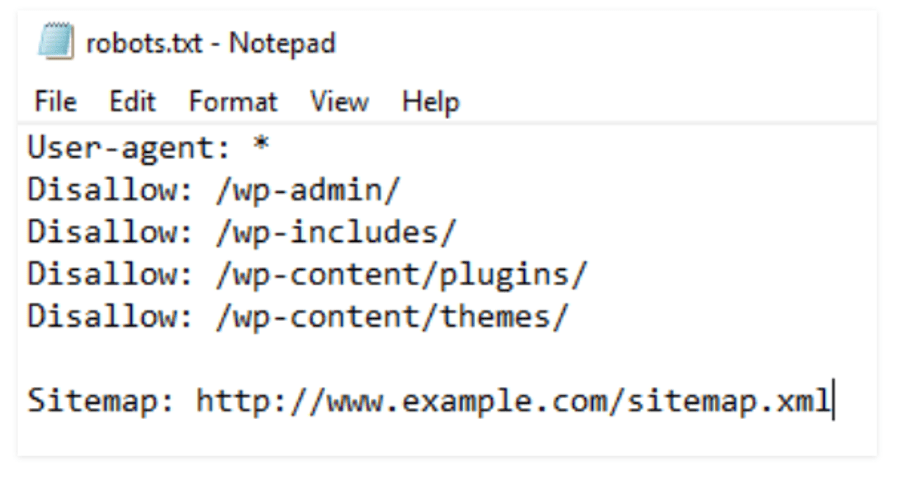

For example, a simple group might look like:

    User-agent: *
    Disallow: /private/
    Allow: /private/public-info.html
    Sitemap: https://example.com/sitemap.xml

This means all bots are blocked from crawling anything under /private/ except public-info.html, which the more specific Allow rule permits. The Sitemap line tells crawlers where to find the sitemap and applies regardless of user-agent.
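The way the Allow rule wins over the broader Disallow rule can be sketched in a few lines of Python. This is a simplified illustration of the longest-match precedence described in RFC 9309 and in Google's documentation, not a full parser: the helper name `is_allowed` is hypothetical, the `*` and `$` path wildcards are not handled, and some crawlers may resolve conflicts differently.

```python
def is_allowed(rules, path):
    """Return True if `path` may be crawled under `rules`.

    `rules` is a list of (directive, path_prefix) pairs for one user-agent.
    The longest matching prefix wins; on a tie, Allow wins; if nothing
    matches, crawling is allowed.
    """
    best_len = -1
    allowed = True
    for directive, prefix in rules:
        if not prefix:  # an empty rule value matches nothing
            continue
        if path.startswith(prefix):
            is_allow = directive.lower() == "allow"
            if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
                best_len = len(prefix)
                allowed = is_allow
    return allowed


rules = [("Disallow", "/private/"), ("Allow", "/private/public-info.html")]
print(is_allowed(rules, "/private/secret.html"))       # False
print(is_allowed(rules, "/private/public-info.html"))  # True
print(is_allowed(rules, "/blog/"))                     # True
```

Because path matching is case-sensitive, a request for /Private/secret.html would not match the Disallow rule in this sketch and would be treated as allowed.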
