To secure sensitive content beyond the limitations of robots.txt directives, you should implement multiple stronger technical and access control measures:

-

Server-level access control (.htaccess or equivalent): Use server configuration files to block or restrict access based on user agents or IP addresses. This prevents unauthorized bots from even reaching your content, unlike robots.txt which relies on voluntary compliance.

-

Web Application Firewall (WAF): Deploy a WAF to filter incoming traffic and block requests from suspicious IPs or known AI scrapers. This adds a dynamic layer of protection that adapts to evolving threats.

-

HTTP Authentication: Require username and password authentication for sensitive areas. This ensures only authorized users can access protected content, effectively blocking all unauthorized crawlers.

-

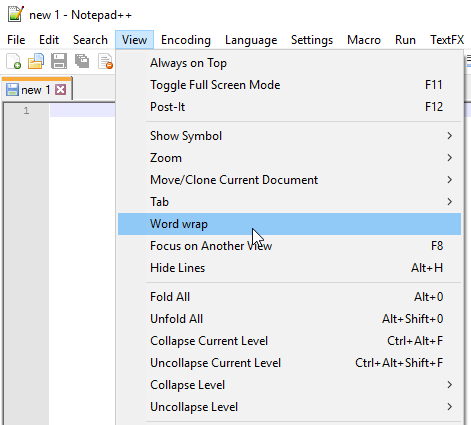

Use HTTP headers like X-Robots-Tag: Add headers such as

X-Robots-Tag: noindexto instruct compliant crawlers not to index or use your content for AI training. This complements robots.txt but still depends on crawler compliance. -

JavaScript-based content rendering: Render critical content via JavaScript after initial page load to make it harder for basic crawlers to scrape. However, sophisticated crawlers can execute JavaScript, so this is only a partial barrier.

-

Rate limiting and IP blocking: Limit the number of requests from the same IP and block known crawler IP ranges. This helps reduce scraping but can be circumvented by distributed crawlers.

-

Advanced bot management solutions: Use professional bot management platforms that employ machine learning and behavioral analysis to detect and block AI scrapers in real time. These solutions provide the most comprehensive protection by adapting to new scraping techniques and distinguishing between legitimate and malicious bots.

-

Password protection and meta noindex tags: For sensitive pages, password-protect them or use meta tags like

<meta name="robots" content="noindex">to prevent indexing and visibility in search results.

Because robots.txt is a voluntary standard and can be ignored or misinterpreted by some crawlers, relying on it alone is insufficient for protecting sensitive content. Combining server-level restrictions, authentication, bot management, and content rendering techniques provides a more robust defence against unauthorized scraping and indexing.

Maple Ranking offers the highest quality website traffic services in Canada. We provide a variety of traffic services for our clients, including website traffic, desktop traffic, mobile traffic, Google traffic, search traffic, eCommerce traffic, YouTube traffic, and TikTok traffic. Our website boasts a 100% customer satisfaction rate, so you can confidently purchase large amounts of SEO traffic online. For just 720 PHP per month, you can immediately increase website traffic, improve SEO performance, and boost sales!

Having trouble choosing a traffic package? Contact us, and our staff will assist you.

Free consultation